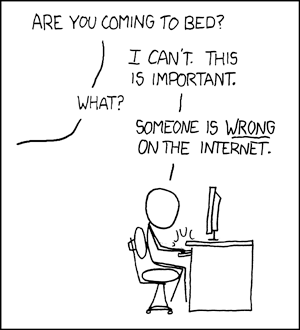

I was recently involved in a small internet spat on LinkedIn with someone who confidently proclaimed the failure of Bayesian statistics and machine learning because, according to them, Bayesianism is inherently subjective. Since I, like many, suffer from Someone-is-wrong-on-the-internet syndrome, I pointed out that this simply isn't true, that there are quite objectivist strains of Bayesianism at the ready, and pointed them to relevant scholars. As usually happens in these sorts of spats, rather than looking at the provided evidence, they replied by citing the well-(re)known subjectivist Bayesian Leonard Savage as the authority on the subject of Bayesianism as a whole. (They also had a weird hatred of model averaging and tried to blame that on Bayesianism too, which ended up being a clue to disengage.)

As I recall, I. J. Good once wrote about the existence of at least 46656 different Bayesianisms [2], so the claim that someone should take Savage, an admitted powerhouse of a subjective Bayesian, as the sole determiner of what Bayesianism must be in its essence is rather guffawingly stupid, especially from, presumably, a practicing statistician, analyst, or machine learning engineer. But such attitudes are readily repeated by well-known statisticians [3] who, in my opinion and the opinions of other practicing Bayesian statisticians [4], ought to know much better.

But let's start with what Savage has to say in The Foundations of Statistics, since it's informative.

Savage Subjectivism

Now, I don't recall Savage denying the existence of objectivist Bayesians, much less providing a convincing argument to shut down the possibility of objective Bayesianism entirely, though he does have some gripes with what he calls "objectivist" interpretations of probability.

The difficulty with the objectivistic position is this. In any objectivistic view, probabilities can apply fruitfully only to repetitive events, that is, to certain processes; and (depending on the view in question) it is either meaningless to talk about the probability that a given proposition is true, or this probability can only be 1 or 0. Under neither interpretation can probability serve as a measure of trust to be put in the proposition. Thus the existence of evidence for a proposition can never, on an objectivistic view, be expressed by saying that the proposition is true with a certain probability. [5]

This would seem to bolster the interlocutor's point about the essential subjectivity of Bayesianism. However, it's worthwhile to pay attention to some immediately prior context that bears on interpreting exactly what Savage is saying here. On the preceding page Savage makes a coarse-grained classification of interpretations of probability into Objectivistic, Personalistic, and Necessary views. Objectivistic views, for Savage, are described as follows:

Objectivistic views hold that some repetitive events, such as tosses of a penny, prove to be in reasonably close agreement with the mathematical concept of independently repeated random events, all with the same probability. ... [T]he evidence for the quality of agreement between the behavior of the repetitive event and the mathematical concept ... is to be obtained by observation of some repetitions of the event, and from no other source whatsoever. [6]

This is important context. By "Objectivistic" here, Savage is talking about frequentism, the view that probability is concerned with, broadly speaking, empirical frequencies of events. This is particularly clear in contrast with the Personalistic and Necessary views that he describes as follows:

Personalistic views hold that probability measures the confidence that a particular individual has in the truth of a particular proposition... These views postulate that the individual concerned is in some ways "reasonable", but they do not deny the possibility that two reasonable individuals faced with the same evidence may have different degrees of confidence in the truth of the same proposition.

Necessary views hold that probability measures the extent to which one set of propositions, out of logical necessity and apart from human opinion, confirms the truth of another. They are generally regarded by their holders as extensions of logic ... [7]

Here, note that Savage introduces the notion of "degrees of confidence", also known as "degrees of belief", in his characterization of Personalistic views. This is a statement about Probabilism, a claim that rational belief comes in degrees that accord with the laws of probability. [8] This is very commonly understood to be a broad, minimally defining characteristic of Bayesianism. [9] And, perhaps unfortunately for Savage's initial characterizations here, the Personalistic and Necessary views are not mutually exclusive. (To be fair, he does later in the book express a similar point -- we'll discuss that in a moment.)

It's clear from context that Savage, at least initially, intends his Personalistic characterization to refer to what we would call a more subjectivist set of views where there are very few postulated constraints on the rationality of a person's credences, or belief probabilities. But this doesn't follow merely from the use of probability to measure an individual's confidence nor from the possibility that rational individuals may diverge upon seeing the same evidence. It does, however, follow from the postulation that an individual's confidence has very few, if any, rational constraints beyond probabilism itself.

The characterization that others may walk away with different conclusions upon seeing the same evidence does not make the theory subjectivist either. After all, we can consider the deductive context where it's often the case that one person's modus ponens is another person's modus tollens -- a quip that I'd like to think is original but probably isn't [9b]. If person A believes that God exists, and person B believes that unjustifiable evil exists, then if they both come to believe that, "If God exists, unjustifiable evil does not exist," then person A will conclude that unjustifiable evil doesn't exist while person B will conclude that God does not exist. If this divergence in conclusions fails to make deductive logic subjective, then neither does it make Bayesianism.

Another way to understand Savage here is to say that it's possible (and rational) that two people look at the same evidence and rationally disagree fundamentally about how that evidence should affect their beliefs. As an extreme example, one possibility under this understanding is that two people, A and B, arrive at a table where they observe 100 flips of the same coin, gathering counts of Heads and Tails. Let's say that the recorded results are 63 Heads, 37 Tails. Person A could say that their subjective credences are set up in such a pathological way that observing 63 Heads out of 100 just proves, with certainty, for them that the coin is fair. This can diverge from Person B who assesses, more modestly, that they now believe the coin's bias is closer to 0.63 than they had previously believed. [10]
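To make the contrast concrete, here is a minimal sketch of how the two agents might be modeled. The priors are my own illustrative assumptions, not anything Savage specifies: Person B spreads belief evenly over a grid of candidate biases, while Person A is stood in for by a dogmatic prior that puts all of its mass on the fair coin. Both agents obey the laws of probability and both update by Bayes' rule, yet only one of them is moved by the data.

```python
from math import comb

def posterior(prior, heads, flips):
    """Bayes' rule over a finite grid of coin biases (prior maps bias -> probability)."""
    unnorm = {b: p * comb(flips, heads) * b**heads * (1 - b)**(flips - heads)
              for b, p in prior.items()}
    total = sum(unnorm.values())
    return {b: w / total for b, w in unnorm.items()}

def mean(dist):
    """Expected bias under a distribution over the grid."""
    return sum(b * p for b, p in dist.items())

grid = [i / 100 for i in range(1, 100)]   # candidate biases 0.01 .. 0.99

# Person B (assumed): equal belief in every candidate bias.
prior_b = {b: 1 / len(grid) for b in grid}
# Person A (assumed): dogmatically certain the coin is fair, come what may.
prior_a = {b: (1.0 if b == 0.5 else 0.0) for b in grid}

post_a = posterior(prior_a, heads=63, flips=100)
post_b = posterior(prior_b, heads=63, flips=100)

print(f"Person A: P(fair | data) = {post_a[0.5]:.3f}")
print(f"Person B: posterior mean bias = {mean(post_b):.3f}")
```

Person A's certainty survives the evidence untouched, while Person B's expected bias lands near the observed frequency. Nothing in Probabilism alone says which of the two priors is the rational one.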

Now, degrees of belief that are untethered to what is suggested by evidence are deemed irrational by most -- there are few strictly subjectivist Bayesians in contemporary epistemology [11], though Savage (along with de Finetti [12]) could be said to be at or near this extreme. To Savage's credit, though, he states later in The Foundations of Statistics that:

All views of probability are rather intimately connected with one another. For example, any necessary view can be regarded as a personalistic view in which so many criteria of consistency have been invoked that there is no role left for the person's individual judgement. ...

From a different standpoint, personalistic views lie not between, but beside, necessary and objectivistic views; for both necessary and objectivistic views may, in contrast to personalistic views, be called objective in that they do not concern individual judgement. [13]

It seems clearer here that Savage sees the contrast between Personalistic views per se and Objectivist / Necessary views as circumscribed by the degree of freedom with which individual, personal judgement enters into the fray. The more constraints on rational belief, the fewer degrees of freedom for individual judgement, and the more similar such Personalistic views become to Objectivist and Necessary views.

So, even by Savage's lights, one can posit rational constraints on belief beyond Probabilism, even so as to render no role for personal judgement by which subjectivism can enter, and still count as a Personalist. In my opinion, this shows the Personalist/non-Personalist distinction to turn on an ontological (or perhaps even a merely linguistic) question the answer to which may have little practical value. If one subscribes to a sufficiently constrained Personalism, so as to make the judgements identical to a Necessary view, then the question reduces to the ontological question of whether probability is grounded in (perhaps ideal) psychological facts or some logical property of evidence as expressed in a language.

What does all this have to do with objective Bayesianism?

As mentioned before, Bayesianism is understood generally to be a collection of views that minimally subscribe to Probabilism, that inference, broadly speaking, follows the rules of probability. [9] This is a large tent that ranges from very subjectivist-leaning views, allowing for a large role of personal judgement in probability, to more objectivist-leaning views, which restrict the role of personal judgement and constrain belief to accord with empirical evidence. Indeed, for Jon Williamson, a fully objective Bayesianism leaves essentially no role for individual judgements of the kind Savage wished to include in his subjectivist theory. [14]

Williamson has explicated what he considers to be the three key norms in determining a thoroughgoing, objective Bayesianism (which he calls, unsurprisingly, Objective Bayesianism):

Probability: An agent's degrees of belief should be representable by probabilities. ...

Calibration: An agent's degrees of belief should be appropriately constrained by empirical evidence. ...

Equivocation: An agent's degrees of belief should be as middling as these constraints permit. ... [15]

Under this characterization [16], extreme subjectivist Bayesians like de Finetti [12] accept only the Probability norm. Frequentists such as John Venn [17] or Richard von Mises [18] can be understood to accept Calibration (or, rather, a nearby principle that doesn't refer to degrees of belief) but reject Probability and Equivocation. Proponents of "classical" or "logical" probabilities like Laplace [19] and J. M. Keynes [20] focused more on Probability and Equivocation than on Calibration. [21] Continuing from Williamson, it isn't until figures like E. T. Jaynes [22] and R. D. Rosenkrantz [23] that these three norms come back together in a contemporary, objective Bayesianism. [16]

Statistics: Subjective Bayes to Objective-ish Bayes

Arguably, one of several reasons why Bayesianism continues to be characterized in purely subjectivist terms is because most Bayesian statisticians make use of techniques that are primarily interpretable in subjectivist terms. As Williamson points out, practicing Bayesian statisticians typically eschew an explicit Calibration strategy in their inference procedures. [24] Even if they take on the Equivocation norm by using a maximum-entropy prior or a weakly-informative reference prior or what Williamson calls the "pretend prior" strategy, the failure to properly employ a Calibration procedure removes important guardrails that, as discussed above, properly bound rational degrees of belief for the objective Bayesian.

Some Bayesian statisticians and practitioners appear to recognize something like this. Andrew Gelman, for example, argues for the practice of "posterior predictive checks" for the Bayesian statistician. [25] This sort of procedure resembles an adherence to Calibration in the model evaluation phase of statistical analysis: models that fail to produce posteriors that sufficiently resemble the empirical data should be suspect.
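As a rough illustration of what such a check can look like, here is a minimal sketch for a coin-flip model. Everything specific in it is my own assumption for the sake of the example: the uniform Beta(1, 1) prior, the made-up data, and the choice of "number of switches" between consecutive outcomes as the test statistic. The idea is simply to simulate replicated datasets from the fitted model and ask whether the observed data look like them.

```python
import random

def switches(seq):
    """Test statistic: number of times consecutive outcomes differ."""
    return sum(a != b for a, b in zip(seq, seq[1:]))

def posterior_predictive_pvalue(data, n_rep=2000, seed=0):
    """Share of replicated datasets with at most as many switches as observed."""
    rng = random.Random(seed)
    n, heads = len(data), sum(data)
    observed = switches(data)
    hits = 0
    for _ in range(n_rep):
        # Draw a bias from the Beta posterior (uniform Beta(1, 1) prior assumed).
        theta = rng.betavariate(1 + heads, 1 + n - heads)
        # Simulate a replicated dataset of the same size from the IID model.
        rep = [1 if rng.random() < theta else 0 for _ in range(n)]
        hits += switches(rep) <= observed
    return hits / n_rep

# Hypothetical data: 50 heads followed by 50 tails, clearly not IID.
data = [1] * 50 + [0] * 50
p_value = posterior_predictive_pvalue(data)
print(f"posterior predictive p-value for 'switches': {p_value:.3f}")
```

The fitted model matches the overall proportion of heads perfectly well, but essentially none of its replications show so few switches, which is the kind of misfit that should make the model suspect.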

In what I'll call the Gelmanian view, model prior distributions represent neither a state of belief (as a subjectivist might have it) nor a state of ignorance (as on a more objective view). Rather, the prior distribution acts as yet another model choice, along with the structure of the likelihood. As such, if a fit Bayesian model is rejected based on posterior predictive checks or other practical defects after its production, the model structure, including the model's prior distribution, is open for adjustment.

More subjectivist Bayesian statisticians, as well as some frequentists [26], have articulated that this process of assessing model fit by evaluating its post hoc frequency properties seems somewhat un-Bayesian. Furthermore, using data to re-condition the model after producing an inference violates Bayesian conditioning.

The standard Bayesian recipe in statistics is to settle on an appropriate prior distribution for your model and conduct inference. But unless you think, independently of your particular data, that your prior distribution was mistaken, it's an error to revise your prior once inference is conducted. Much more severe in that case is using the data to produce a revised prior distribution to manufacture a posterior that targets that data. If "Empirical" Bayesians [27] already got flak for "double-dipping" their data, it seems like such charges should apply here.

However, if we understand the Gelmanian procedure in terms of the three Williamsonian norms (recall Probabilism, Calibration, Equivocation), we can see that this procedure does aim at something like Calibration. [28] It may violate strict Bayesian conditionalization [29], but it's in service of a sensible norm that is operative on the epistemic level. This is a point that, as we will see in a moment, agrees with the objective Bayesian. [30]

Statistics, Epistemic Norms, and Frequentism

Williamson provides what seems to me a quite plausible and explanatorily helpful breakdown of how this sort of subservience of statistics to epistemology works first by means of an articulation of what the conflict is between the statistical goals of Bayesianism and frequentism.

Different kinds of statistical practice can be understood as implementations of a general practical schema. [31, next three sections]

General Statistical Scheme

1. Conceptualize the problem and isolate a set of models for consideration. Typically these are something like probability functions.

2. Gather evidence.

3. Apply statistical methods to evaluate the models in step 1 in light of the evidence from step 2. Inferences will be made from the subset of models that are appropriate and satisfactory.

Frequentist Statistical Scheme

1. Conceptualize the problem. The models under consideration are a set of candidates representing the physical probability of a data generating process. Physical probability, here, is usually understood in the usual frequentist way (Venn, von Mises, etc.).

2. Gather evidence.

3. Apply statistical methods to these models. Models are acceptable for inference only in the case that they make the evidence gathered sufficiently likely.

Bayesian Statistical Scheme

1. Conceptualize the problem. Choose a set of models and a prior function defined over that set. This prior function is usually understood as a belief function representing degrees of belief and is defined for single cases rather than frequentist repetitions.

2. Gather evidence, ensuring that it is representable in the domain of the prior distribution.

3. Adopt a new belief function that is the conditioning of the prior distribution with the newly gathered evidence, usually with something like Bayesian conditioning / Bayes' Rule. Then, models are acceptable for inference in the case that they have sufficiently high posterior probabilities according to the revised belief function.
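The frequentist and Bayesian schemes above can be put side by side in a small sketch. All of the specifics are assumptions of mine for illustration: the nine candidate models, the evidence of 41 "successes" in 100 trials, the exact binomial test with a doubled-tail p-value and a 0.05 cutoff on the frequentist side, and the uniform prior with a 0.10 posterior cutoff on the Bayesian side.

```python
from math import comb

def binom_pmf(k, n, theta):
    """Probability of exactly k successes in n trials with chance theta."""
    return comb(n, k) * theta**k * (1 - theta)**(n - k)

def two_sided_pvalue(k, n, theta):
    """Doubled smaller tail of an exact binomial test, capped at 1."""
    lower = sum(binom_pmf(i, n, theta) for i in range(0, k + 1))
    upper = sum(binom_pmf(i, n, theta) for i in range(k, n + 1))
    return min(1.0, 2 * min(lower, upper))

models = [i / 10 for i in range(1, 10)]   # hypothetical candidate chances 0.1 .. 0.9
k, n = 41, 100                            # illustrative evidence

# Frequentist-style acceptance: keep models that make the evidence likely enough.
alpha = 0.05                              # assumed significance level
freq_accepted = [m for m in models if two_sided_pvalue(k, n, m) >= alpha]

# Bayesian-style acceptance: condition a prior on the evidence and keep the
# models whose posterior probability is high enough.
prior = {m: 1 / len(models) for m in models}   # assumed uniform prior
unnorm = {m: prior[m] * binom_pmf(k, n, m) for m in models}
total = sum(unnorm.values())
post = {m: w / total for m, w in unnorm.items()}
threshold = 0.10                               # assumed acceptance threshold
bayes_accepted = [m for m in models if post[m] >= threshold]

print("frequentist-accepted:", freq_accepted)
print("posterior:", {m: round(p, 3) for m, p in post.items()})
print("Bayesian-accepted:", bayes_accepted)
```

With these particular choices the two filters happen to keep the same models, but they get there by asking different questions: one asks how probable the evidence is under each model, the other how probable each model is given the evidence and a prior.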

Here we can directly locate the apparent conflict between frequentist and Bayesian statistics, as has been done many times before, but it's articulated here as a model selection problem with conflicting acceptance criteria. The goal of a frequentist statistics is to perform inference by selecting models that track objective chance as a (broadly) physical property of a data generating mechanism. The goal of a Bayesian statistics, however, is to determine what is rational to believe after receiving data (presumably from a data generating mechanism). They're about two different things: one is about physical properties and the other is about rational belief, presumably as the basis for action (betting, for example). And if these goals are pitted head-to-head, as they often are, then there's sure to be conflict about which goal we're really after in our inference, leading to, in my opinion, absurd accommodationist views that freely take up frequentism or Bayesianism as the mood suits them with little regard for what rationality requires. [32]

For Williamson, this conflict is not necessary, as Bayesianism is, at heart, an epistemological thesis. As such, we can ascend Bayesian constraints to the epistemological level and actually cooperate with frequentist methods to solve relevant subproblems. If successful, it provides a cooperative scheme between frequentism and Bayesianism that avoids the moody mutability of accommodationism.

A Sketch of Williamsonian Objective Bayesian Statistics

In this section, I'll largely refer the mathematical expositions to Williamson 2013, not least because reproducing the characterizations with MathJax is not my jam today, so I'll paraphrase the details that I consider relevant. It's also worth noting that Williamson 2013 prefers to explicate the theory as a theory on sentences as opposed to a theory on sets. This is common in the philosophical literature, and we can be assured [33] that there's very little that rests on this choice -- feel free to translate the theory into more familiar terms if you'd like. [34]

The Epistemic Norms Explicated

Williamson's explication of the Probability norm is straightforward, and he points out that this minimizes sure loss, as arises in Dutch Book arguments. [35]

His explication of Calibration is also plausible by my lights, though it perhaps needs some discussion here. For him, Calibration constrains evidence-informed belief functions to a particular convex hull consisting of the linear interpolations between the set of candidate physical probability functions. If there are additional structural constraints on rational belief that are not mediated by physical probabilities, then those apply at this step as well. (The paper assumes none and moves on.) Williamson points out in his related lecture that Calibration avoids almost-sure long-run loss [35], but continues in the paper to argue that Calibration is also related to avoiding worst-case expected loss. His Calibration norm is related to, and intended to be a generalization of, David Lewis' Principal Principle [36], which states, in essence, that if we know the objective chances of something being the case, then ceteris paribus our degrees of belief about that thing being the case should match the objective chances. Williamson's formulation has the benefit of handling general constraints on physical probability where Lewis' formulation does not. [37]

Finally he explicates Equivocation. Recall that the gloss for Equivocation was that an agent's beliefs should be as middling or non-committal as possible subject to the previous constraints of Probability and Calibration. This is intended to capture the motivations behind ideas like Laplace's Principle of Indifference or Jaynes' Principle of Maximum Entropy: we should, in some sense, be maximally unsure about data we do not yet have. [38, 39] This is captured by means of identifying an equivocator, a probability function that maximally equivocates between the atomic events or propositions in the space, and optimizing our evidence-enriched belief function toward that equivocator by means of minimizing the Kullback-Leibler Divergence [40] from it to the proposed belief function. (My understanding is that in general the equivocator is a maximum-entropy belief function.) Williamson [35] notes that Equivocation minimizes worst-case expected loss.
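The link between the equivocator and maximum entropy can be checked numerically in the simplest setting. The sketch below assumes a finite outcome space with a uniform equivocator, and a made-up belief function over four atomic outcomes; the set-based framing is mine rather than Williamson's sentence-based one. In that setting, the KL divergence from a belief function to the equivocator equals the log of the number of outcomes minus the belief function's entropy, so minimizing the divergence and maximizing entropy come to the same thing.

```python
from math import log

def entropy(p):
    """Shannon entropy (natural log) of a finite distribution given as a list."""
    return -sum(x * log(x) for x in p if x > 0)

def kl_divergence(p, q):
    """KL(p || q) for finite distributions over the same outcomes."""
    return sum(x * log(x / y) for x, y in zip(p, q) if x > 0)

# Hypothetical belief function over four atomic outcomes.
belief = [0.4, 0.3, 0.2, 0.1]
# Equivocator: uniform over the same four outcomes.
equivocator = [0.25] * 4

lhs = kl_divergence(belief, equivocator)
rhs = log(len(belief)) - entropy(belief)
print(lhs, rhs)   # the two agree up to floating-point error
```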

Williamson's Objective Bayesian Inference Scheme

With those three epistemic norms operative, we can start talking about how this is all intended to operate as a statistical inference procedure. I'll summarize Williamson's explication as before. [41]

1. Conceptualize the problem. Choose the set of probability functions over the agent's language (or set-based events, or however you've translated the probability theory).

2. Gather evidence and isolate the set of models properly Calibrated to the evidence (that is, that are given by the convex hull of physical probability functions, etc.).

Note: This is the stage at which frequentist methods can be applied. Selecting models that fit data is, after all, their goal.

3. The belief functions acceptable for action are a subset of these evidence-calibrated models. For Objective Bayesians, this is a (likely proper) subset of models that satisfy Equivocation; that is, are minimal with respect to the KL-Divergence to the equivocator.

It's interesting to note that while this inference scheme might be described, inaccurately in my opinion, as a hybrid frequentist-Bayesian view, there's no requirement to use frequentist methods in step two -- it's just great that we have a very well-developed theory of statistics that is focused specifically on Calibration and that it makes sense to use it. If there is some other statistical method that satisfies Calibration, that can be plugged in instead.

An Example

Williamson also provides a helpful extended example that I'll talk through in summary [41].

Problem Statement: An agent sits on a T-shaped road, observing whether oncoming drivers turn left or right. After 100 observations, the agent is interested in predicting whether the 101st driver will turn left or right. Their observations indicate that over the past 100 drivers, 41 have turned left. The agent is also sufficiently confident that these observations are IID (independent and identically distributed) and that there's no weirdness with time dependence (exchangeability).

Inference:

1. Conceptualize and identify models.

The agent asks what degree of belief they would need in order to accept that the next car will turn left (L) in their current context (which also entails a belief on ~L=R, by Probabilism). Williamson uses some decision/utility theory here to estimate that for this agent, in this context, they would need a degree of belief of 0.75.

In my opinion, the integration with decision theory here is certainly a boon, but without other theoretical commitments, I think it's in principle reasonable for there to be some other strategy the agent could use here, such as deferring to a domain-specific expectation (like 95% for frequentists). The decision theoretic justification for this bound, though, strikes me as superior.
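For a sense of how a threshold like 0.75 can fall out of decision theory, here is a minimal sketch with hypothetical stakes. The payoffs are mine, chosen only so that the arithmetic lands on 0.75; they are not the utilities Williamson uses. If acting on a proposition gains G when it is true and loses C when it is false, then acting has non-negative expected utility exactly when the degree of belief p satisfies p * G - (1 - p) * C >= 0, that is, when p >= C / (G + C).

```python
def acceptance_threshold(gain_if_right, loss_if_wrong):
    """Smallest degree of belief p at which acting on a proposition has
    non-negative expected utility: p * gain - (1 - p) * loss >= 0."""
    return loss_if_wrong / (gain_if_right + loss_if_wrong)

# Hypothetical stakes: being wrong costs three times what being right gains.
threshold = acceptance_threshold(gain_if_right=1.0, loss_if_wrong=3.0)
print(threshold)   # 0.75
```

Raising the cost of being wrong pushes the threshold toward 1, which is the sort of practical consideration that is awkward to express directly as a false positive rate.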

This minimum degree of belief is then used as the desired coverage level for a confidence interval. Here, Williamson refers to the usual frequentist intervals formed by means of the Central Limit Theorem, but I suspect that any strategy of producing (narrowest) intervals with the necessary coverage will do (bootstrap, conformal, etc). Unfortunately, this inverse function from coverage level (alpha) to symmetric interval distance (delta) is quite ugly, but the point for this example is that it exists and we have the interval.
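Here is a minimal sketch of one way to compute such an interval, assuming 41 of the 100 observed turns went in the direction of interest and a coverage of 0.75. The choice of the Wilson score interval is my assumption, and Williamson's own derivation may differ in its details. I picked it because, for these inputs, it lands on the interval quoted later in the example, [0.355, 0.467], to three decimals.

```python
from math import sqrt
from statistics import NormalDist

def wilson_interval(successes, n, coverage):
    """Wilson score interval for a binomial proportion at the given coverage."""
    z = NormalDist().inv_cdf(0.5 + coverage / 2)   # two-sided normal quantile
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width

# 41 of 100 observed turns in the direction of interest, 0.75 coverage.
low, high = wilson_interval(successes=41, n=100, coverage=0.75)
print(f"[{low:.3f}, {high:.3f}]")
```

Note that the interval is not symmetric around the sample frequency of 0.41; the Wilson construction pulls its center slightly toward 0.5.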

2. Gather evidence and isolate Calibrated models.

Now that the agent has an actual confidence interval that has the appropriate, context-specific coverage probability, the agent Calibrates their degrees of belief accordingly. That is, since the physical chance that the next car will turn left is bounded by the calculated confidence interval with physical probability 0.75, the agent assigns 0.75 as their degree of belief that the physical probability of the next car turning left is in that interval. The resemblance to the Principal Principle here should be clear.

Now, since the agent believes to degree 0.75 that the probability of turning left is contained in the identified confidence interval, and since 0.75 was, by design, the minimum the agent would need to believe that the next car will turn left, the agent should accept that the probability of turning left is in these bounds. That is, for the purpose of the problem, we need only consider for inference the (albeit very simple) models that remain in this interval.

Recall the IID and exchangeability assumptions. With them in hand, we have that the agent's degree of belief that the next car will turn left is somewhere in the identified confidence interval, a somewhat simple intermediate result.

3. Equivocate and Infer.

Now, the agent has a set of remaining models, those consistent with the identified confidence interval, leaving them with the belief that the next car will turn left is somewhere in the confidence interval, calculated to be [0.355, 0.467]. Now, by Equivocation, they choose the point in this interval that minimizes the distance to the equivocator. In this case, the equivocator is a simple Bernoulli with parameter 0.5. This makes life easy, since 0.5 is above the upper bound of the interval, suggesting that the agent take the upper bound 0.467 to be the final, desired estimate.
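The Equivocation step for this example can be sketched in a few lines. I'm assuming the divergence is taken as KL(belief || equivocator) and using a plain grid search over the interval; for a single Bernoulli parameter the answer is the same in either direction, since the divergence shrinks steadily as the parameter approaches 0.5. The interval endpoints are the ones quoted above.

```python
from math import log

def kl_bernoulli(p, q):
    """KL(Bernoulli(p) || Bernoulli(q)), natural log."""
    def term(a, b):
        return 0.0 if a == 0 else a * log(a / b)
    return term(p, q) + term(1 - p, 1 - q)

def equivocate(low, high, equivocator=0.5, steps=1000):
    """Grid search for the point in [low, high] closest in KL to the equivocator."""
    grid = [low + (high - low) * i / steps for i in range(steps + 1)]
    return min(grid, key=lambda p: kl_bernoulli(p, equivocator))

estimate = equivocate(0.355, 0.467)
print(round(estimate, 3))   # 0.467
```

Had the interval straddled 0.5, the same search would simply return a point at (or within floating-point error of) 0.5, since nothing in the evidence would then pull the agent away from the equivocator.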

All of this might sound complicated at first, but it really boils down to something like the following:

1. Have some set of possible physical models.

2. Choose an appropriate, context-specific confidence level.

3. Get data.

4. Calculate your confidence interval.

5. Choose the point in the interval that is closest to the equivocator.

Now this might seem too boiled down, since it seems very close to what a frequentist might say to do, but keep in mind what's being done here under the hood.

Interpretational and Procedural Benefits to Williamsonian Inference

The Williamsonian approach to Bayesian inference brings a number of benefits to frequentist inference.

First, it allows the application of probabilities to single-shot scenarios, such as the probability that the 101st car will turn left, rather than only hypothetical repetitions of experiments involving 101 observed cars.

Second, it allows a straightforward interpretation of confidence intervals in terms of what we can reasonably believe about parameters in that interval. The common occurrence of interpreting a 95% confidence interval as saying that a parameter has a 95% chance of being in the interval now has some reasonable grounding, rather than simply being denied by fiat since parameters are not physically random.

Third, it provides for explicit, decision-theoretic considerations to drive Calibration. Scientists often fall back on a purported false positive rate of 0.05 for their frequentist statistical inference, and anyone wishing to consider alternative bounds needs to translate their practical considerations (financing, time, etc.) into a discussion about false positive rates. It's much more promising to leverage the machinery of decision theory here to deal with these sorts of practical issues, especially in industry where the tradeoffs associated with statistical criteria like false positive rates can be nearly incommunicable with business leaders. [42]

Summary and Next Steps

Overall, this blog entry was an excuse to air gripes about overconfident statisticians on social media, do a quick survey of Bayesianism, and dive into a particular theory of objective Bayesianism and how to apply it for fun and profit. I think it's interesting to see the explication of objective Bayesianism in a way that seems to evade Savage's categorization, though it might just be shoehorned under a kind of Necessary view if it came to it. It's interesting that while he allows that Personalist views can coexist beside the two other views, he doesn't seem to consider the other categories to be similar. This is interesting because there's a sense in which Objective Bayesianism can fit into all three categories in different respects: Personalist, in the sense that it has to do with Belief construed broadly, Necessary, in the sense that it's determined uniquely by the evidence (modulo some context), and Objective, in the sense that it is calibrated to empirical frequencies. Such a possibility doesn't really fit into Savage's remarks, in my opinion.

I'm also interested to consider what sorts of views might live in the undiscussed combinations of the three Williamsonian norms. We should have 7 possibilities to choose subsets (8 if you reject them all, I suppose), but we've seen subjectivist Bayesians accept Probabilism, Objectivist Bayesians accept them all, some Bayesians accept Probabilism and Equivocation, and some others accept Probabilism and Calibration. Frequentists effectively assent to Calibration alone. Which views might, for example, just accept Equivocation, or just Calibration and Equivocation? Is there a way to make sense of them? I suspect not, but it's something to consider.

I think my next steps will be to spend some more time with Williamson, particularly his actual book on his brand of Objective Bayesianism, then look to apply methods like these to contexts in machine learning, particularly with the hype around conformal prediction sets in the ML world. I'm nearly always a fan of seeing Bayesianism in practice, and it's been disappointing to consistently see the computational challenges associated with fully Bayesian inference. I'd like to see if Williamson's ideas provide a practical computational advantage in the ML landscape, though I'm a bit fuzzy about how we might get full distributional outputs, as Bayesians are wont to do; gotta love that Bayesian compositionality.

Footnotes:

[1] Munroe 2008

[2] Good 1983

[3] Mayo 2013

[4] Gelman 2014

[5] Savage 1972, p. 4

[6] Savage 1972, p. 3

[7] Savage 1972, p. 3

[8] Lin 2023, Section 3.

[9] Weisberg 2011, "Loosely speaking, a Bayesian theory is any theory of non-deductive reasoning that uses the mathematical theory of probability to formulate its rules."

[9b] This is the same idea at the heart of "Moorean shifts", named after G. E. Moore and his "commonsense" defense of external-world realism.

[10] Williamson 2013 has a similar example of an agent who has (total) evidence that the chance of a certain particle decaying is 0.01 yet who believes the particle will decay to degree 0.99.

[11] Williamson 2013. Williamson also goes on to suggest an interesting relationship to Moore's Paradox, a puzzle regarding apparently irrational statements of the form, "It is the case that P, but I do not believe that P," or "P & B(~P)" where B is a predicate indicating belief.

[12] de Finetti 1937/1980 and de Finetti 1970/2017. de Finetti was well known for uncompromisingly subjectivist views on probability and statistics.

[13] Savage 1972, p. 60

[14] Williamson 2011

[15] Williamson 2011

[16] Williamson 2011

[17] Venn 1888

[18] von Mises 1939

[19] Laplace 1825/1902

[20] Keynes 1921/2016

[21] Recall that these views would have been called Necessary views by Savage.

[22] Jaynes 2003. Jaynes' work on objectivist inference inspired by concepts from information theory goes back to at least 1957, where he introduces the Principle of Maximum Entropy. See Jaynes 1957.

[23] Rosenkrantz 1977

[24] Williamson 2013

[25] Gelman et al 2013, p 141: "A good Bayesian analysis... should include at least some check of the adequacy of the fit of the model to the data and the plausibility of the model for the purposes for which the model will be used."; p 150: "...[W]e use predictive checks to see how particular aspects of the data would be expected to appear in replications."

[26] Deborah Mayo comes immediately to mind, but there are others.

[27] Casella 1985

[28] Gelman 2011: "... Bayesian probability, like frequentist probability, is except in the simplest of examples a model-based activity that is mathematically anchored by physical randomization at one end and calibration to a reference set at the other."

[29] Conditionalization is commonly taken to be a central feature of Bayesian epistemology (Pettigrew 2020). However, I agree with Williamson (2011) that Conditionalization cannot be the whole story that Bayesians tell about updating beliefs. Given that there are more constraints on belief than just probabilism, it doesn't surprise me that, in edge cases, conservative Bayesian conditionalization can go quite wrong. It's enough that Bayes' Rule behaves reasonably in the non-pathological cases where we expect it to apply, and that when it doesn't, other constraints play a role in explaining that failure and recommending the more reasonable belief.

Taking this a bit further, we can view Bayes' Rule as an ideal, or perhaps a meta-norm, that governs the choice of update rules rather than as the update norm itself. This view seems reasonable to me and seems to be instantiated by what I'll call indirect Bayesian inference procedures such as Cross-Entropy Minimization (MinXEnt; de Boer et al. 2005), also known as Minimum Discrimination Information (MDI; Kullback 1959/1968), which identify Bayesian posteriors as the distributions minimizing the cross entropy from prior to posterior under the constraint of the data. These information theory-derived methods differ from the usual Bayesian statistical methods in that Bayes' Rule acts as a constraint on a kind of optimization rather than as a calculation rule involving specification of priors and likelihoods. Indeed, in my experience, information theory tends to be a common field from which more objective Bayesians come to harvest.

[30] I should state, however, that I don't suspect that Williamson would agree with this exact Gelmanian practice. It somewhat resembles the "pretend prior" view that he describes earlier. I don't think that Gelmanianism is directly in Williamson's crosshairs with his complaints about post hoc, data-driven priors being too flexible to be replacements for real Calibration, since Gelmanian practice holds these priors accountable to the empirical data via posterior predictive checks. Nonetheless, Williamson has his own strategy with an eye on explicit calibration during, not after, the modeling phase, which, to me, seems a bit better motivated from an epistemological standpoint: rather than hoping that post hoc statistical checks will be enough to satisfy Calibration, just build it explicitly into the inference.

[31] Williamson 2013

[32] As an example, consider popularizations like Kozyrkov 2020, where the choice between frequentism and (subjectivist) Bayesianism is cast as a matter of framing "what you want to change your mind about" without any discussion of epistemology.

[33] Weisberg 2011

[34] The beginning of Williamson's presentation in Williamson 2013b was helpful in understanding how inference is implemented as a loss-minimization problem subject to constraints conforming to the Objective Bayesian norms. (Graphic at ~2:38)

[35] Williamson 2013b at approximately 3:25. In Williamson 2013, he refers to worst-case expected loss here. I prefer the lecture's breakdown of three different losses for the three different norms.

[36] Lewis 1987. The Principal Principle states, in summary, that if, ceteris paribus, we believe the objective chances of an event to be a particular quantity, then our degree of belief in that event happening should equal the same quantity. See also [11].

[37] This, at least numerically, resembles the Convexity constraint discussed by Weisberg 2011 p. 10 in the context of ambiguation and indeterminate probability. To my mind, it's not a surprise that convexity would show up in both contexts, since there are apparent similarities between density-free reasoning over confidence intervals and density-free reasoning over indeterminate probabilities.

[38] Without the Calibration norm, Equivocation can lead to some strange results for the empirically-minded Bayesian, including problematic dependence on how a problem is posed before performing the Equivocation. [33] In my understanding, Calibration helps with this problem, because if the problem posed changes basis (or vocabulary), the appropriate Calibration constraint will also transform accordingly before applying Equivocation.

[39] One common trope demonstrating a misuse of Equivocation takes the form of an explication about something happening in the future: "No matter what, it either will happen or it won't. It's all 50%." A colleague of mine believes that Rush Limbaugh even once said something to this effect. I don't doubt it, though I am in doubt that Rush Limbaugh has, or ever had, rational beliefs in the first place.

[40] Kullback and Leibler 1951. The KL-Divergence is a common measure of statistical "distance" between distributions. It isn't a true distance, in the metric sense, because of its asymmetry, so the direction of the difference is important. The KL-Divergence appears in Bayesian inference as the amount of entropy lost by moving from a prior distribution to a posterior. See Burnham and Anderson 2002, p. 51.
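
To make the asymmetry concrete, here is a minimal sketch of the discrete KL-Divergence computed in both directions. The two-outcome prior and posterior are made-up numbers chosen purely for illustration, and `kl_divergence` is a hypothetical helper name, not something taken from the cited sources.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same outcomes.

    Assumes q[i] > 0 wherever p[i] > 0; terms with p[i] == 0 contribute nothing.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative (made-up) distributions over two outcomes.
prior = [0.5, 0.5]
posterior = [0.9, 0.1]

# Swapping the arguments changes the answer, which is why direction matters.
forward = kl_divergence(posterior, prior)
reverse = kl_divergence(prior, posterior)

print(f"KL(posterior || prior) = {forward:.4f}")
print(f"KL(prior || posterior) = {reverse:.4f}")
```

The two printed values differ, which is all the "not a true distance" remark amounts to in practice: one has to say which distribution is being measured from which.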

[41] Williamson 2013

[42] It's worth taking a moment here to realize why Wasserman's remark quoted in [3] that objective Bayesian inference is just frequentism in Bayesian clothing is off the mark.

References:

Bernardo, J. M., Smith, A. F. M. (2009). Bayesian Theory. Germany: Wiley. (Original work published 1994)

Burnham, K. P., & Anderson, D. R. (Eds.). (2002). Information and Likelihood Theory: A Basis for Model Selection and Inference. In Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach (pp. 49–97). doi:10.1007/978-0-387-22456-5_2

Casella, G. (1985). An Introduction to Empirical Bayes Data Analysis. The American Statistician, 39(2), 83–87. https://doi.org/10.2307/2682801

de Boer, P.-T., Kroese, D. P., Mannor, S., & Rubinstein, R. Y. (2005). A Tutorial on the Cross-Entropy Method. Annals of Operations Research, 134(1), 19–67. doi:10.1007/s10479-005-5724-z

de Finetti, B. (1980). Foresight: Its Logical Laws, Its Subjective Sources. In H. E. Kyburg, Jr. & H. E. Smokler (Eds.), Studies in Subjective Probability (pp. 53–118). Robert E. Krieger Publishing Co., Inc. (Original work published in Annales de l’Institut Henri Poincare, Vol. 7 (1937). Reprinted by permission from author and publisher Gauthier-Villars. Translated by Henry E. Kyburg Jr.)

de Finetti, B. (2017). Theory of Probability: A Critical Introductory Treatment. Wiley Series in Probability and Statistics. (Original work published 1970)

Gelman, A., Carlin, J. B., Stern, H. S., Dunson, D. B., Vehtari, A., & Rubin, D. B. (2013). Bayesian Data Analysis, Third Edition. CRC Press.

Gelman, A. (2011, April 15) Bayesian statistical pragmatism. Statistical Modeling, Causal Inference, and Social Science. https://statmodeling.stat.columbia.edu/2011/04/15/bayesian_statis_1/

Gelman, A. (2014, January 16). Against overly restrictive definitions: No, I don’t think it helps to describe Bayes as “the analysis of subjective beliefs”. Statistical Modeling, Causal Inference, and Social Science. https://statmodeling.stat.columbia.edu/2014/01/16/22571/

Good, I. J. (1983). 46656 Varieties of Bayesians. In Good thinking: The foundations of probability and its applications (pp. 20–21). University of Minnesota Press.

Hájek, Alan, (2023). "Interpretations of Probability", The Stanford Encyclopedia of Philosophy (Winter 2023 Edition), Edward N. Zalta & Uri Nodelman (eds.), https://plato.stanford.edu/archives/win2023/entries/probability-interpret/

Jaynes, E. T. (1957). Information Theory and Statistical Mechanics. Physical Review, 106(4), 620–630. doi:10.1103/PhysRev.106.620

Jaynes, E. T., & Bretthorst, G. L. (2003). Probability Theory: The Logic of Science. Cambridge University Press.

Jeffrey, R. C. (1983). The Logic of Decision. University of Chicago Press. (Original work published 1965)

Keynes, J. M. (2016). A Treatise on Probability. IP Publications. (Original work published 1921)

Kozyrkov, C. (2020, September 2) Are you Bayesian or Frequentist? [Video] https://youtu.be/GEFxFVESQXc?si=sasYD4R7RhYWWT0Q

Kullback, S., & Leibler, R. A. (1951). On Information and Sufficiency. The Annals of Mathematical Statistics, 22(1), 79–86. http://www.jstor.org/stable/2236703

Kullback, S. (1968). Information Theory and Statistics. Dover Publications. (Original work published 1959)

Laplace, Pierre Simon. (1902). A Philosophical Essay on Probabilities. Translated from the sixth French edition by F. W. Truscott and F. L. Emory. Robert Drummond Printer. (Original work published 1825 as Essai philosophique sur les probabilités)

Lewis, D. (1987). A Subjectivist’s Guide to Objective Chance. In Philosophical Papers Volume II. doi:10.1093/0195036468.003.0004

Lin, Hanti, (2023). "Bayesian Epistemology", The Stanford Encyclopedia of Philosophy (Winter 2023 Edition), Edward N. Zalta & Uri Nodelman (eds.), https://plato.stanford.edu/archives/win2023/entries/epistemology-bayesian/

Mayo, D. (2013, December 13) Wasserman on Wasserman: Update! December 28, 2013. Error Statistics Philosophy. https://errorstatistics.com/2013/12/28/wasserman-on-wasserman-update-december-28-2013/

Munroe, R. (2008, February 20). Duty calls. xkcd. https://xkcd.com/386

Pettigrew, R. (2020). What is conditionalization, and why should we do it? Philosophical Studies, 177(11), 3427–3463. doi:10.1007/s11098-019-01377-y

Rosenkrantz, R. D. (1977). Inference, Method and Decision: Towards a Bayesian Philosophy of Science. Reidel.

Savage, Leonard J. (1972). The Foundations of Statistics. Wiley Publications in Statistics. (Original Work published 1954)

Venn, John. (1888). The Logic of Chance. Macmillan and Co. (Original work published 1866)

von Mises, R. (1939). Probability, Statistics and Truth. Dover Publications.

Weisberg, J. (2011). Varieties of Bayesianism. In D. M. Gabbay, S. Hartmann, & J. Woods (Eds.), Inductive Logic (pp. 477–551). doi:10.1016/B978-0-444-52936-7.50013-6

Williamson, J. (2011). Objective Bayesianism, Bayesian Conditionalisation and Voluntarism. Synthese, 178(1), 67–85. doi:10.1007/s11229-009-9515-y

Williamson, J. (2013). Why Frequentists and Bayesians Need Each Other. Erkenntnis, 78(2), 293–318. doi:10.1007/s10670-011-9317-8

Williamson, J., & Landes, J. (2013b, September 17-18). Objective Bayesian epistemology for inductive logic on predicate languages. [Lecture video] Fadi Akil. https://www.youtube.com/watch?v=5KFGJlQF_MI

Disclaimer: I probably got some stuff wrong, but hopefully something interesting came out of it.
